Feature Selection with Quantum Approximate Optimization Algorithm (QAOA)

This article presents a method to perform feature selection using the Quantum Approximate Optimization Algorithm (QAOA).

QAOA is a hybrid quantum-classical algorithm for combinatorial optimization problems. A parameterized quantum circuit prepares candidate solutions, and a classical optimizer tunes the circuit parameters to minimize the expected cost. In this application, QAOA is used to select the most relevant features for a machine learning model, with the aim of improving its performance.

Preparing the Environment

Importing Necessary Libraries

import numpy as np

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.feature_selection import mutual_info_classif

from qiskit import Aer

from qiskit.algorithms import QAOA

from qiskit.algorithms.optimizers import COBYLA

from qiskit.utils import algorithm_globals

from qiskit_optimization import QuadraticProgram

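from qiskit_optimization.algorithms import MinimumEigenOptimizer

This code block imports the libraries required for the implementation. numpy and pandas are used for data manipulation, sklearn.model_selection for splitting the dataset, sklearn.feature_selection for scoring features by mutual information, and several modules from qiskit and qiskit_optimization to implement QAOA. The qiskit.algorithms and qiskit.utils imports, and the Aer import from qiskit, follow the pre-1.0 Qiskit API, so an older Qiskit release is assumed.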

Loading the Dataset

df = pd.read_csv('yourdataset.csv')

# Work on a random sample of 1,000 rows to keep the problem manageable
data_sample = df.sample(1000)

X = data_sample.drop(['default.payment.next.month', 'ID'], axis="columns")

y = data_sample['default.payment.next.month']

A sample of 1,000 rows is taken to make the process more manageable. X and y hold the features and the target variable, respectively.

Splitting the Data

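# Split parameters are an assumption; scikit-learn defaults are used
X_train, X_test, y_train, y_test = train_test_split(X, y)

n_features = 10

The data is split into training and test sets, and the number of features to be selected is set to 10.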

Implementing QAOA for Feature Selection

Defining the Feature Selection Problem

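def create_feature_selection_problem(X, y, n_features, importance):

    qp = QuadraticProgram()

    # One binary variable per feature: 1 means the feature is selected
    for i in range(X.shape[1]):

        qp.binary_var(f'x{i}')

    # Reward important features (negative sign because the objective is minimized)
    linear_terms = {f'x{i}': -importance[i] for i in range(X.shape[1])}

    # Unit penalty on every ordered pair of distinct selected features
    quadratic_terms = {(f'x{i}', f'x{j}'): 1.0 for i in range(X.shape[1]) for j in range(X.shape[1]) if i != j}

    qp.minimize(linear=linear_terms, quadratic=quadratic_terms)

    # Select exactly n_features features
    qp.linear_constraint(linear=[1] * X.shape[1], sense='==', rhs=n_features)

    return qp

This function constructs a quadratic program for the feature selection problem, which will be solved using QAOA. Each feature gets a binary variable, the linear terms reward features with high importance, and an equality constraint forces exactly n_features features to be selected.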
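Written out, the quadratic program built by this function can be read as follows. The notation is introduced here for illustration and is not from the original: m is the number of features, I_i is the importance score of feature i, k is n_features, and x_i is the binary variable for feature i.

```latex
\begin{aligned}
\min_{x \in \{0,1\}^m} \quad & -\sum_{i=0}^{m-1} I_i\, x_i \;+\; \sum_{i \neq j} x_i x_j \\
\text{subject to} \quad & \sum_{i=0}^{m-1} x_i = k
\end{aligned}

% On the feasible set, using x_i^2 = x_i for binary variables:
\sum_{i \neq j} x_i x_j
  = \Big(\sum_{i} x_i\Big)^2 - \sum_{i} x_i^2
  = k^2 - k
```

Under this reading, the quadratic term takes the same value for every selection that satisfies the constraint, so the minimizer is the set of k features with the largest importance. The quadratic term would only influence the choice if its coefficients differed from pair to pair.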

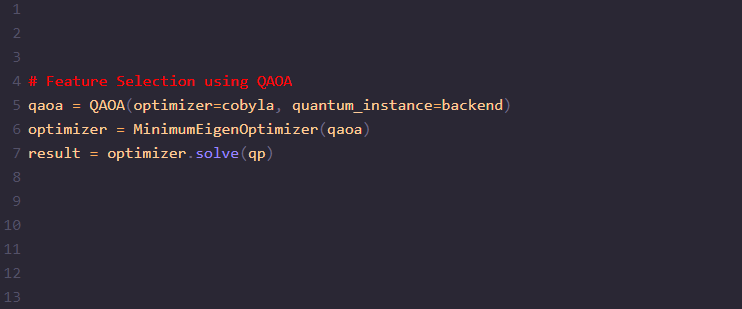

Applying QAOA

importance = mutual_info_classif(X_train, y_train)

qp = create_feature_selection_problem(X, y, n_features, importance)

backend = Aer.get_backend('aer_simulator')  # a simulator is used in this example

cobyla = COBYLA(maxiter=25)

qaoa = QAOA(optimizer=cobyla, quantum_instance=backend)

optimizer = MinimumEigenOptimizer(qaoa)

result = optimizer.solve(qp)

selected_feature_indices = [int(var_name[1:]) for var_name, value in result.variables_dict.items() if value == 1]

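vector_names_qaoa = X.columns[selected_feature_indices]

In this step, QAOA is used to solve the feature selection problem. Feature importance is estimated with mutual information on the training data, the quadratic program is solved with QAOA through MinimumEigenOptimizer, and the variables set to 1 in the result give the selected features.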
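Because QAOA is a heuristic, it can help to compare its answer with an exact solution on a small instance. The following is a minimal sketch of such a check, not part of the original workflow: it evaluates the same objective (negative importance plus a unit penalty per ordered pair of selected features) by exhaustive search. The importance values and the helper names objective and brute_force_select are hypothetical.

```python
from itertools import combinations


def objective(selected, importance):
    """Objective of the quadratic program for a tuple of selected feature indices."""
    linear = -sum(importance[i] for i in selected)
    # One unit of penalty for every ordered pair (i, j) with i != j
    quadratic = sum(1.0 for i in selected for j in selected if i != j)
    return linear + quadratic


def brute_force_select(importance, n_features):
    """Try every subset of size n_features; only feasible for a handful of features."""
    candidates = combinations(range(len(importance)), n_features)
    return min(candidates, key=lambda subset: objective(subset, importance))


# Hypothetical importance scores for six features (not taken from the article's dataset)
importance = [0.02, 0.31, 0.07, 0.18, 0.00, 0.25]

best = brute_force_select(importance, n_features=3)

# The three features with the highest importance, as a sorted tuple of indices
top_k = tuple(sorted(sorted(range(len(importance)), key=lambda i: -importance[i])[:3]))

print("Exhaustive search:", best)
print("Top-k by importance:", top_k)
```

On an instance this small, the subset returned by QAOA can be compared directly with the exhaustive result to see how close the 25 COBYLA iterations get to the optimum.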

Creating New Datasets with Selected Features

X_train_qaoa = X_train[vector_names_qaoa]

X_test_qaoa = X_test[vector_names_qaoa]

New training and testing datasets are created using the features selected by QAOA.

Displaying Selected Features

print("Selected Features using QAOA:")

for feature in vector_names_qaoa:

    print(feature)

The final step displays the features selected by QAOA, which makes the outcome of the selection process easy to inspect.

This article demonstrates how quantum computing techniques can be integrated into a classical machine learning workflow. Applying QAOA to feature selection may improve the performance of machine learning models.
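One way to check whether a selection helps is to compare a model trained on all features with one trained on the selected subset. The sketch below is an illustration under assumptions, none of which come from the article: it uses a synthetic dataset from make_classification, a logistic regression classifier, and the four features with the highest mutual information as a stand-in for the QAOA result. The helper name holdout_accuracy is hypothetical.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.feature_selection import mutual_info_classif
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the article's dataset (assumption)
X_demo, y_demo = make_classification(
    n_samples=500, n_features=12, n_informative=4, random_state=0
)
X_tr, X_te, y_tr, y_te = train_test_split(X_demo, y_demo, random_state=0)

# Stand-in for the QAOA selection: the four highest mutual-information features
scores = mutual_info_classif(X_tr, y_tr, random_state=0)
selected = np.argsort(scores)[-4:]


def holdout_accuracy(columns):
    """Fit on the training split and score on the held-out split, using only `columns`."""
    model = LogisticRegression(max_iter=1000)
    model.fit(X_tr[:, columns], y_tr)
    return accuracy_score(y_te, model.predict(X_te[:, columns]))


acc_all = holdout_accuracy(list(range(X_demo.shape[1])))
acc_selected = holdout_accuracy(selected)

print(f"All features:      {acc_all:.3f}")
print(f"Selected features: {acc_selected:.3f}")
```

With the article's data, X_train_qaoa and X_test_qaoa would take the place of the column-sliced arrays used here.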

The approach is limited in the size of the datasets it can handle, in both data points and features, because of the simulator's capacity and the current limitations of quantum hardware.
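To give a rough sense of the simulator limit, the sketch below estimates the memory needed to hold a full statevector. It assumes one qubit per feature (one binary variable each), a full statevector simulation method, and 16 bytes per complex128 amplitude. The function name statevector_memory_bytes is hypothetical, and the actual memory use depends on the simulation method the backend selects.

```python
def statevector_memory_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory for a full statevector: 2**n_qubits amplitudes of complex128 each."""
    return (2 ** n_qubits) * bytes_per_amplitude


# Memory grows by a factor of two with every additional feature
for n in (10, 20, 30, 40):
    gib = statevector_memory_bytes(n) / 2**30
    print(f"{n:>2} features -> {gib:,.6f} GiB")
```

Under these assumptions, memory doubles with each added feature, which is why the number of candidate features has to stay small on a simulator.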